Listed in the Gartner Hype Cycle of NLP Technologies: Neural Machine Translation

In its recent analysis of the risks and opportunities of adopting language technologies, Gartner specifically mentioned our Neural Machine Translation (NMT) and praised our ability to tailor and adapt NMT models to our clients' requirements.

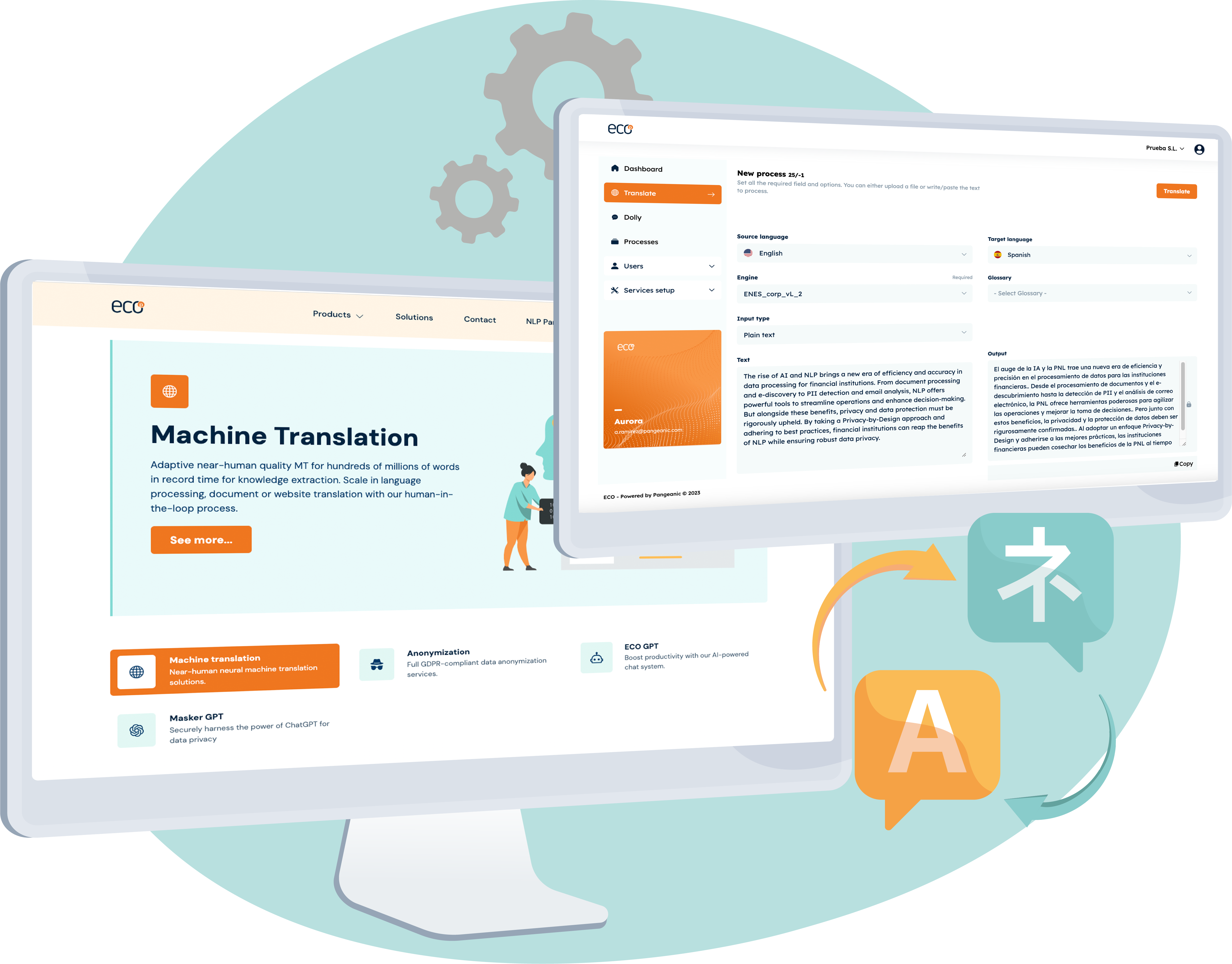

What can Machine Translation do for your business?

Integrating AI Machine Translation into your workflows is just a couple of clicks away with our powerful API. Use your own content to create custom Machine Translation engines with your own terminology and expressions for your products and services.

PangeaMT adapts quickly to your style: choose on-the-fly or “after review” engine updates, or simply use one of the most accurate and private MT systems for business, government and enterprise.

Translate documents, emails, web content and any other communication quickly and accurately, into any language you need, with privacy in mind. Add other NLP processes such as text classification, PII discovery and anonymization, or knowledge extraction.

- Large volumes of text

- 250+ language pairs

- Post-editing

- Terminology, tone and style adaptation

- Saves time and money

- In the cloud or on-premises

Instead of reading about it, try it!

Put Pangeanic's Machine Translation to the test and let technology do the work for you. With our free translation dashboard, you can experience the quality and accuracy of our Machine Translation, developed by AI professionals and expert linguists from all over the world.

Join a community of users

Spanish IRS

With 25,000 registered users, the Spanish Tax Agency uses a private SaaS to translate legal and tax documentation, intellectual property material, and international claims exchanged with other tax authorities worldwide.

Veritone

A Gartner-reviewed, Nasdaq-listed company offering one of the first AI operating systems. Because Veritone serves law enforcement, its clients often require a private environment for translating forensic evidence.

Omron

Machine Translation of technical product sheets, with full .docx/.pdf reconstruction.

IBWC

Fast Machine Translation API used within a CAT-tool environment (memoQ). This Texas-based US government agency translates politically sensitive documents, hydrology reports and water rights policies.

Pangeanic has been selected five times to provide machine translation services to successful EU projects, such as Europeana Collection, GreenScent, and the creation of a grid of neural machine translation (NMT) engines for public administrations.

What does Pangea Machine Translation offer you?

Translate any type of document

Whether you need to translate legal contracts, technical reports, instruction manuals or extensive web content, our Machine Translation system is designed to handle any type of document, regardless of its format, complexity or size.

Imagine being able to upload your documents to our platform and get fast, accurate translations into several languages, with your original layout preserved.

Save time and resources and, above all, make your business more agile and efficient.

Translate into any language

If you need to communicate with foreign partners and customers, or you are thinking of entering new international markets, PangeaMT is the perfect solution for you. Our system is designed to provide fast and accurate translations in a wide variety of languages and language combinations.

Take advantage of new business opportunities anywhere in the world, regardless of the target audience's language.

Accurate translations for your industry

We understand that each industry has its own set of technical terms and specialized language requirements. That is why we pride ourselves on having a team of highly trained linguists who are familiar with the nuances and particularities of each field. Whether you need to translate medical, legal, technical, financial or any other type of documents, our Machine Translation guarantees impeccable accuracy.

PangeaMT is not ordinary Machine Translation: it combines advanced algorithms with the valuable experience of professional linguists to deliver the terminology, tone and cultural references your translation needs.

Total data security

Data security is a top priority for us. We understand how valuable our clients' confidential information and documents are, which is why we offer our Machine Translation service either in the cloud or in a private facility that meets all security and privacy standards.

Cheap is expensive. Do not think twice about hiring a professional service that will help your business succeed.

Typical Machine Translation usages

- Legal texts (international litigation) and law firms

- Financial institutions (banks, etc.)

- E-commerce

- Social networks

- Governments and public administrations

- Tourism, travel, hotel and hospitality businesses

- Media and communication

- Translation companies

- Printing/publishing companies

Leading organizations that trust Pangeanic

Subscriptions to PangeaMT, our Machine Translation service

Standard PangeaMT

12 months

€1,000

€100 monthly

- Pages per month: 100

- Words per page: 350 approx.

- Extra page: €1.2

- Formats: .txt, .doc, .docx, .pdf, .pptx

- Language pairs: 1

- Users: 2

- Generic Engine

- Technical Support

Professional PangeaMT

12 months

€2,000

€200 monthly

- Pages per month: 250

- Words per page: 350 approx.

- Extra page: €1

- Formats: .txt, .doc, .docx, .pdf, .pptx

- Language pairs: 2

- Users: 5

- Generic Engines

- Technical Support

Premium PangeaMT

12 months

€3,000

€300 monthly

- Pages per month: 500

- Words per page: 350 approx.

- Extra page: €0.8

- Formats: .txt, .doc, .docx, .pdf, .pptx

- Language pairs: 3

- Users: 10

- Trainable Engines: 2

- Customizable Glossaries

- Professional Tools

- API Access

- Technical Support

MyMT PangeaMT

12 months

My price

My pages

- Pages per month: upon request

- Words per page: 350 approx.

- Extra page: €0.5

- Formats: all

- Language pairs: upon request

- Users and Administrators: upon request

- Generic and Trainable Engines: upon request

- Domain Engine Creation: upon request

- Customizable Glossaries

- Professional Tools

- API Access

- Technical Support

*VAT not included
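The subscription figures above can be turned into a rough monthly cost estimate. The Python sketch below is illustrative only and rests on assumptions that the plan descriptions do not state: it uses the monthly fees rather than the 12-month prices, treats 350 words as one page and rounds partial pages up, and bills every page beyond the monthly allowance at the plan's extra-page rate. The names `PLANS`, `pages_for`, `monthly_cost` and `cheapest_plan` are hypothetical. The plans also differ in language pairs, users and features, which this sketch ignores.

```python
import math

# Monthly fee (EUR), pages included per month, price per extra page (EUR),
# copied from the Standard, Professional and Premium plans above. VAT excluded.
PLANS = {
    "Standard": (100.0, 100, 1.2),
    "Professional": (200.0, 250, 1.0),
    "Premium": (300.0, 500, 0.8),
}

WORDS_PER_PAGE = 350  # the plans state "350 approx."


def pages_for(words: int) -> int:
    """Estimate pages for a word count (assumption: partial pages round up)."""
    return math.ceil(words / WORDS_PER_PAGE)


def monthly_cost(plan: str, words: int) -> float:
    """Estimated monthly cost in EUR, excl. VAT (assumption: flat per-page overage)."""
    fee, included_pages, extra_page_price = PLANS[plan]
    extra_pages = max(0, pages_for(words) - included_pages)
    return round(fee + extra_pages * extra_page_price, 2)


def cheapest_plan(words: int) -> str:
    """Return the plan with the lowest estimated monthly cost for this volume."""
    return min(PLANS, key=lambda plan: monthly_cost(plan, words))


if __name__ == "__main__":
    for name in PLANS:
        print(name, monthly_cost(name, 70_000))
    print("Cheapest:", cheapest_plan(70_000))
```

For example, 70,000 words a month works out to about 200 pages. Under these assumptions the Standard plan would cost €100 + 100 × €1.20 = €220, the Professional plan €200 (no extra pages) and the Premium plan €300, so `cheapest_plan(70000)` returns `"Professional"`.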

Our history

Pangeanic was at the forefront of the development of Statistical Machine Translation systems from the very beginning, in the early 2010s. It was the first company in the world to adopt and customize Moses, the first open-source Statistical Machine Translation system, and it created the first self-training platform (PangeaMT), a precursor to more modern architectures such as ModernMT and attention models.

After a period of research collaboration with Toshiba, Pangeanic launched PangeaMT v3. In 2017, Pangeanic adopted the Transformer architecture (deep neural networks) and has been working toward human parity in Machine Translation ever since, that is, Machine Translation models that translate as well as humans. Since 2020, Pangeanic has published numerous academic papers that develop its concept of online learning (i.e., machines that learn as users translate).

Want to learn more about our academic and R&D publications on Machine Translation and other areas of NLP? See our Google Scholar results.

Types of Neural Machine Translation

Pangeanic offers near-human quality Machine Translation for different applications

Cloud

- Standard engines for general translation (translating documents, sentences, etc.)

- In our private cloud infrastructure. Your data is kept safe in its own space or deleted after use.

- Standard engines that you can customize with your own data to build your own translation system in our cloud.

- Machine Translation API.

- Document translator.

In your own infrastructure

- Machine Translation engines customized with your own or domain-specific data, to grow and enhance your translation system in your own infrastructure or cloud.

- Complete Machine Translation systems (corporate versions) in your own infrastructure.

- A branch of our own corporate version.

- Integrated systems (API)

- Document translator.